Download Pack

This pack contains 192 VJ loops (126 GB)

https://www.patreon.com/posts/88599939

Behind the Scenes

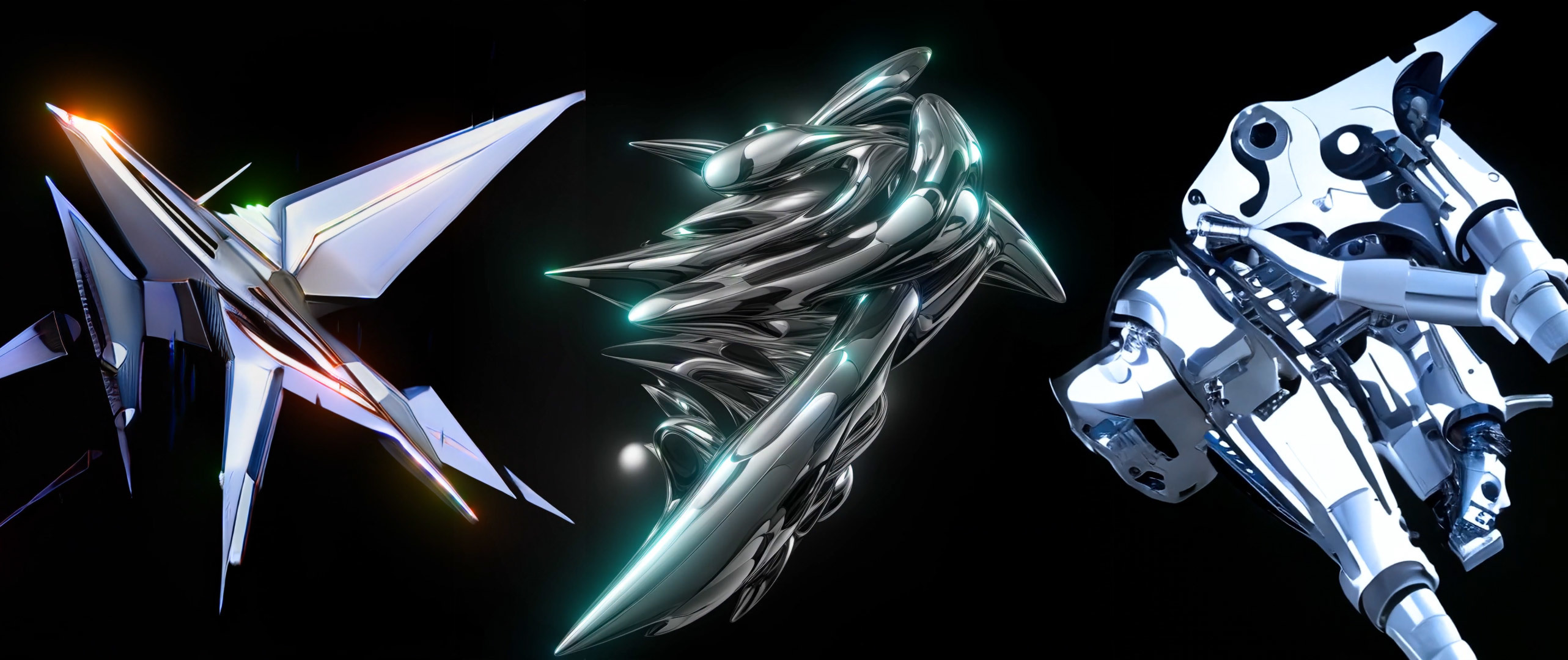

Shiny polished metal is seemingly anathema to Mother Nature, which is why it symbolizes digital tech so well.

Recently the ZeroScopeV2 models were released. After some poking around, I got them working in A1111 using the 'txt2video' extension. First I experimented with the recommended approach: text-to-video using ZeroScope-v2-576w and then uprezzing with ZeroScope-v2-XL. Due to the design of these models, the entire frame sequence must fit within my 16GB of VRAM, so I'm only able to generate 30 frames per video clip! And I can't string together multiple renders using the same seed. Ouch, that's a serious limitation, but luckily there are just enough frames to work with and create something interesting. So I rendered out several hundred video clips using different text prompts and then took them into Topaz Video AI, where I uprezzed them and also did a 3x slomo interpolation. This allowed me to reach about 1.5 seconds per video clip at 60fps. Then I brought all of the clips into AE, did a bit of curation, and lined them up back-to-back. It's exactly this type of limitation that I find creatively refreshing: I would not normally edit together tons of 1.5-second clips, but this technique forced me to reconsider, and the result is a large number of short surreal visuals on a given theme with the intense feel of fast cuts. Sometimes embracing stringent limitations can be a useful creative game.
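The clip length above follows from simple arithmetic: 30 native frames, multiplied by the 3x interpolation, played back at 60fps. The sketch below is only an illustration; the function names are hypothetical, and the exact frame count Topaz Video AI outputs may differ slightly depending on how it treats the first and last frames, so an assumed "in-betweens only" variant is included for comparison.

```python
def interpolated_frames(native_frames: int, factor: int, keep_endpoints: bool = False) -> int:
    """Frame count after slow-motion interpolation by an integer factor."""
    if keep_endpoints:
        # Assumed alternative: (factor - 1) new frames inserted between each adjacent pair,
        # so the last source frame gets no trailing in-betweens.
        return (native_frames - 1) * factor + 1
    # Simple model: every source frame becomes `factor` output frames.
    return native_frames * factor


def clip_seconds(native_frames: int, factor: int, fps: float, keep_endpoints: bool = False) -> float:
    """Playback duration in seconds of the interpolated clip at `fps`."""
    return interpolated_frames(native_frames, factor, keep_endpoints) / fps


if __name__ == "__main__":
    # 30 ZeroScope frames, 3x slomo, played back at 60fps
    print(clip_seconds(30, 3, 60))                                  # 1.5
    print(round(clip_seconds(30, 3, 60, keep_endpoints=True), 3))   # 1.467
```

Either way the result lands at roughly 1.5 seconds per clip, which is why the edit ends up as a rapid sequence of short cuts.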

From there I was curious what would happen if I skipped the ZeroScope-v2-576w text-to-video step and instead injected my own videos into the ZeroScope-v2-XL model. So I took a few of the SG2 chrome videos, cut them into 30-frame chunks, and injected them into the ZeroScope-v2-XL model. Holy mackerel, this method created utter gems that were exactly what I'd been daydreaming of for years. Just like SG2, ZeroScope seems to do really well when you inject visuals that already match the given text prompt, likely because it allows the model to focus on a single task without getting distracted. I'm guessing that the SG2 videos, with their particular morphing style and black background, allowed ZeroScope to reimagine the movements in a weirdly different way.
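Cutting a rendered video into 30-frame chunks is a plain list-slicing job once the video has been exported as an image sequence. The following is a minimal sketch, not the workflow actually used here: it assumes the frames are PNG files with zero-padded numbers (so sorting by name gives playback order), and both helper names are hypothetical. By default it drops a short leftover chunk at the end, on the assumption that every input to ZeroScope-v2-XL should be exactly 30 frames.

```python
from pathlib import Path
from typing import List, Sequence


def list_frames(folder: str, pattern: str = "*.png") -> List[str]:
    """Return frame paths sorted by name (assumes zero-padded frame numbers)."""
    return sorted(str(p) for p in Path(folder).glob(pattern))


def chunk_frames(frames: Sequence[str], chunk_size: int = 30, drop_partial: bool = True) -> List[List[str]]:
    """Split an ordered frame sequence into consecutive, non-overlapping chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks = [list(frames[i:i + chunk_size]) for i in range(0, len(frames), chunk_size)]
    # A short tail chunk would not match the fixed clip length, so drop it by default.
    if drop_partial and chunks and len(chunks[-1]) < chunk_size:
        chunks.pop()
    return chunks


if __name__ == "__main__":
    demo = [f"frame_{i:05d}.png" for i in range(95)]  # stand-in for a rendered SG2 video
    chunks = chunk_frames(demo)
    print(len(chunks), chunks[1][0], chunks[-1][-1])  # 3 frame_00030.png frame_00089.png
```

Each resulting chunk can then be fed to the model as its own 30-frame clip; passing `drop_partial=False` keeps the tail if it is worth padding or rendering separately.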

I had been curious about the RunwayML Gen-2 platform, so I gave it a try. I tested out the text-to-video tool and generated a few surreal videos that were 4 seconds each. The amount of movement in each video was minimal, though maybe that could be refined with some tinkering. But the main limitation was having to spend credits to first nail down an ideal text prompt and then render out about 100 clips, without any batch function. This limitation really hampers my creative process, which is why I always prefer to run things locally on my own computer. I was also interested in trying out the video-to-video tool, but by my estimate it was going to cost too much to create what I had in mind, and manually sending each clip to render would have been very tedious.

For a while now I've dreamed of being able to make variations of an image so that I could create a highly specific dataset with a tight theme. Last year I had tested out the Stable Diffusion v1.4 unclip model, but it didn't produce desirable results for my abstract approach. So I was quite curious when I learned about the release of the Stable Diffusion v2.1 unclip model, and I immediately got some interesting results. Amazingly, no text prompt is needed! Just input an image and render out tons of variations. Comparing the original image to its variations, there was a clear resemblance, even in the pose, material, and lighting of the subject.

So I selected 192 abstract chrome images that I created for the Nanotech Mutations pack and then had SD2.1-unclip create 1,000 variations of each image using A1111. Once that finished rendering, I ended up with 192,000 images grouped into 192 different pseudo themes. I thought that this dataset would have enough self-similarity to start training StyleGAN2, but in my tests the model had lots of trouble converging into anything interesting. When I looked at the dataset as a whole, it became clear that there was actually too much variation for SG2 to latch onto. I looked into training a class-conditional model using SG2 until I realized that I wouldn't be able to interpolate between the various classes, which was a bummer. But since each 1,000-image group could be selected manually, I went through the dataset and split the images into 6 smaller datasets that definitely shared the same theme. Golly, that was tedious. From there, training 6 different models in SG2 converged nicely since each dataset was so focused.
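Because every variation belongs to exactly one source image, the manual split described above amounts to assigning whole 1,000-image groups to a theme and collecting their files. The sketch below illustrates that bookkeeping under stated assumptions: the `srcNNN/varNNNN.png` naming scheme, the theme labels, and the function names are all hypothetical, and the actual curation was done by hand.

```python
from collections import defaultdict
from typing import Dict, List

NUM_SOURCES = 192              # chrome source images from the Nanotech Mutations pack
VARIATIONS_PER_SOURCE = 1_000  # SD2.1-unclip variations rendered per source image


def variation_path(source_id: int, variation_id: int) -> str:
    """Hypothetical naming scheme: one folder per source image."""
    return f"src{source_id:03d}/var{variation_id:04d}.png"


def split_by_theme(group_to_theme: Dict[int, str],
                   variations_per_source: int = VARIATIONS_PER_SOURCE) -> Dict[str, List[str]]:
    """Build per-theme file lists by assigning whole source groups to a theme.

    Groups that are not listed in `group_to_theme` are left out entirely.
    """
    themes: Dict[str, List[str]] = defaultdict(list)
    for source_id, theme in sorted(group_to_theme.items()):
        if not 0 <= source_id < NUM_SOURCES:
            raise ValueError(f"unknown source group {source_id}")
        themes[theme].extend(variation_path(source_id, v) for v in range(variations_per_source))
    return dict(themes)


if __name__ == "__main__":
    print(NUM_SOURCES * VARIATIONS_PER_SOURCE)  # 192000
    picks = {0: "theme_a", 7: "theme_a", 42: "theme_b"}  # hypothetical hand-made assignment
    datasets = split_by_theme(picks)
    print({name: len(files) for name, files in datasets.items()})  # {'theme_a': 2000, 'theme_b': 1000}
```

Assigning whole groups rather than individual images keeps each theme's dataset internally consistent, which matches the observation that SG2 converged once each dataset shared a single theme.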

I thought it would be interesting to take the chrome SG2 videos, render them to frames, and then inject them into SD v1.5 to experiment with the stop motion technique. It was fun to try out different text prompts and see how they responded to the input images. Then I remembered a very early experiment where I rendered out a 3D animation of a person breakdancing in Unity and tried to inject it into Disco Diffusion, but I wasn't thrilled with the result. So I grabbed the breakdancer renders and injected them into SD v1.5, and I loved how it sometimes looked like warped chrome metal and other times like a chrome-plated human.

Recently Stable Diffusion XL was released, which I had been excitedly anticipating. But since it's so brand spanking new, A1111 didn't yet support the dual base/refiner model design. I could, however, load a single model into A1111 and experiment with it that way... which is where I had a happy-accident idea. Why not try out the stop motion technique but use the SDXL refiner model directly, especially since this model is purpose-built for uprezzing to 1024x1024? The results were even better than what I could pull off using SD v1.5, likely because the SDXL refiner model was trained differently. Also, the difference between working at 512x512 and 1024x1024 in SD is dramatic, and so many more details are included. I have many ideas to explore with this newfound technique. Plus I'm curious to see how Stable WarpFusion looks with this new SDXL model.

There are chrome machines and green vines intermingling on the distant horizon. It's a mirage of the future but some version of it approaches all the same. The golden age of algorithms is at its crest.

Discussion (1)

You have to pay at least US$5, so these packs are by no means completely free! In fact, nothing here is free!!

I tried to get the download link via patron.com, but it didn't work without paying.

bennoH. 🐼