(Full disclaimer: I have had a coding block for over a decade, despite having a scientific background. I managed to avoid coding during my PhD, which in hindsight is nothing to be proud of. Thanks to VJ Union, AI art and Shadertoy, I have only recently overcome it. I have been running various AI scripts in Python, programming some simple shaders, and learning TouchDesigner for over a year. Over the next year I plan to take things further and learn blockchain programming (smart contracts, APIs), machine learning (making my own models, testing and debugging algorithms), Unreal Engine and Smode.)

In the last article we discussed various apps, and as many have noticed, a common theme is to offer free usage, use the resulting user input to develop models, and then charge money for them. This has been the industry standard for a very long time (the use of ads and user data by Google and Facebook is a textbook example of machine learning applied this way). As Grigori mentioned in another article, Stability AI has been a big game changer here.

A little background on Stability: there has been a wider debate, on both the ethics and the licensing side, about the correct way to proceed with AI research. OpenAI was a big player that tried to do this correctly but then went fully commercial. EleutherAI (https://www.eleuther.ai) is the only other community from that time that works at a big enough scale while remaining non-profit, though there are of course Hugging Face and some public university labs doing great innovation.

So what is the big deal about Stability? Why is Stable Diffusion revolutionary and a game changer? Well, Stability made enough of an impact that Facebook recently released its Llama language model for free commercial use with few conditions attached (not exactly open source, though the intricacies and direction of AI licensing are currently beyond my expertise and worthy of an article by themselves). This is a vast improvement over ChatGPT and many others. There are no use cases yet, but you can test it for free (if you have space to download a 400GB model, or you can run it on xethub.com if you are an expert). Microsoft and Google have also made moves over the year. Now these are some serious abusers of AI ethics (this is worthy of another article). I wouldn't say a Terminator scenario is completely out of the question yet... xD

Before Stable Diffusion came along, AI art and generative research was a hobbled mess: you had to keep track of hundreds of scripts and figure out how to run each of them. In other words, it was hardly accessible. Your author spent 6 months just learning a few (Disco Diffusion, PyTTI...) before they all became obsolete. Along came Stable Diffusion, and within a year there were hundreds of apps, websites and what not. The fully open-source availability of what Stability develops is a big part of this, as is Emad being a very visionary intellectual.

Why should you run things locally, apart from the cost benefits? Well, for one, you can see what is actually happening instead of conversing with a mysterious genie. The large language model (LLM) that OpenAI released 2 years ago is of course a big part of pretty much all the innovations we are seeing. There is already exhaustive information on how Stable Diffusion, diffusion in general and prompt engineering work, for example at https://stable-diffusion-art.com

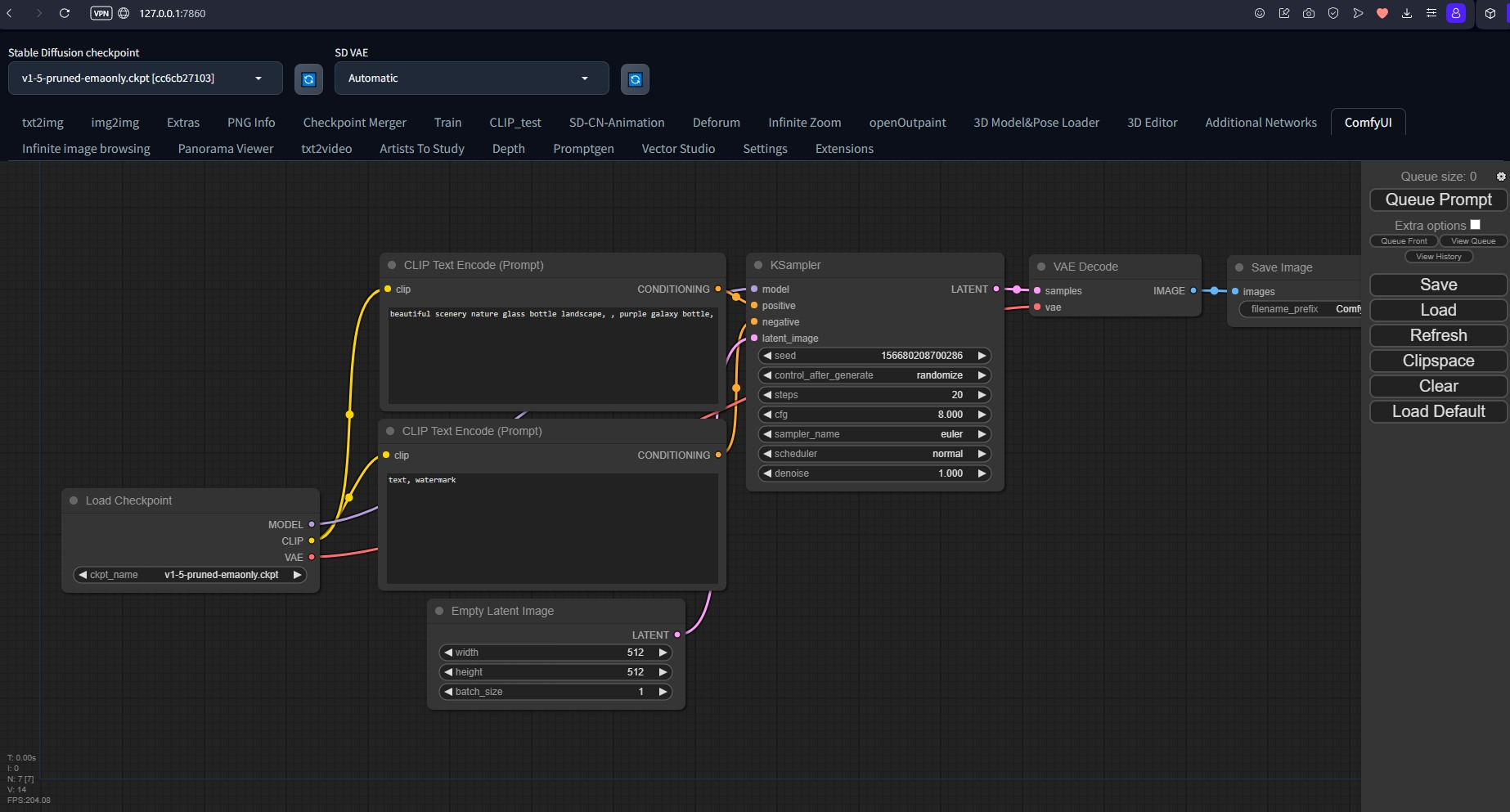

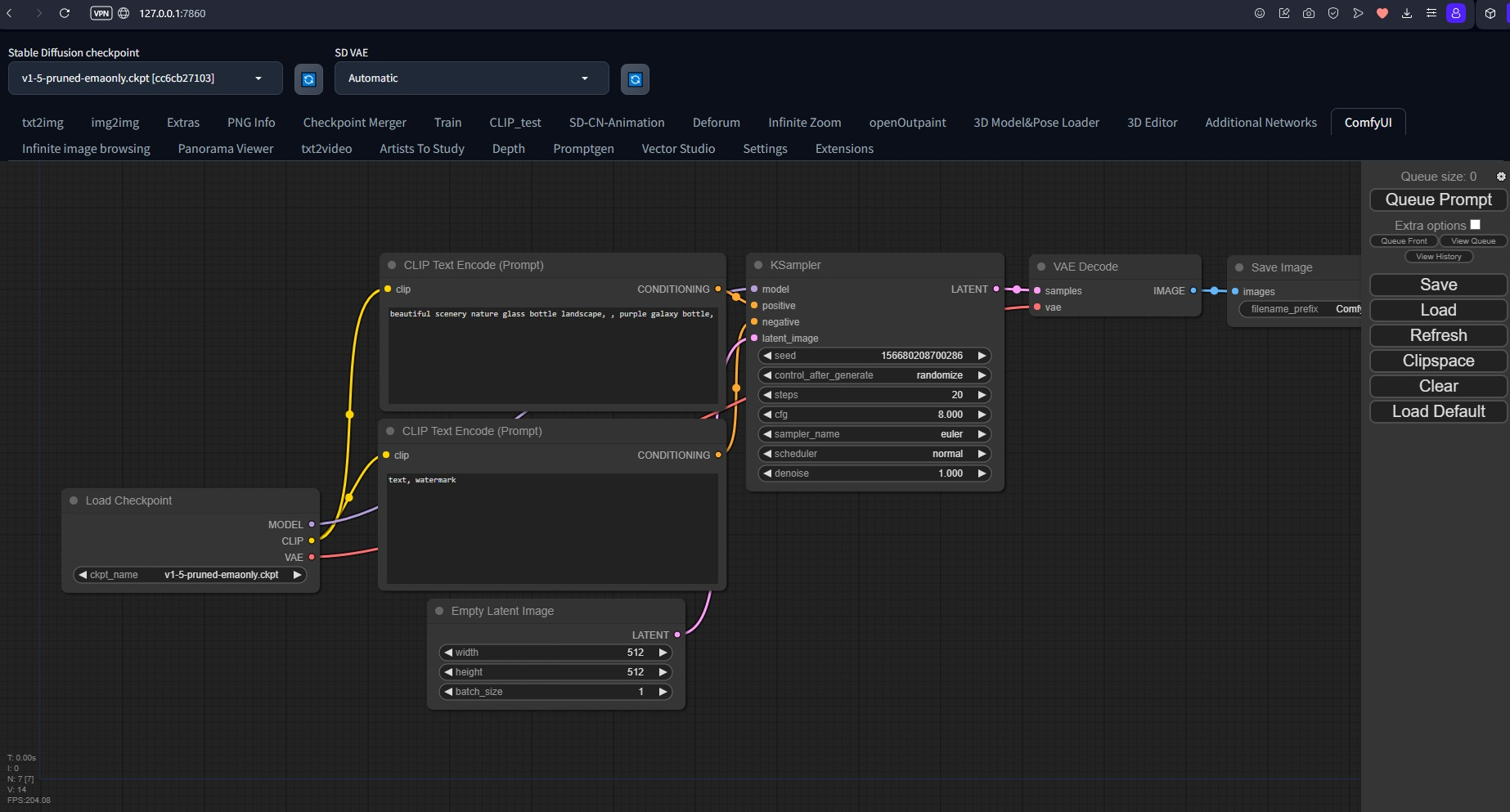

So moving on, let me introduce you to SD web UI, an all-in-one Swiss army knife (see post image) for most of your visual AI needs. No more going crazy building different Python installs and plugins for Blender, Unreal and so on. SD web UI does not have plugins for those yet, but it does for Photoshop and EbSynth, plus loads of other goodies. Think of it as a Spout for AI; that is what it could become in the long run.

https://github.com/AUTOMATIC1111/stable-diffusion-webui/

https://aituts.com/sdxl/

There was a recent article on ComfyUI and AInodes. Let me tell you that ComfyUI works completely inside the web UI, as my title image suggests. AInodes has a bug, so it is not working on Windows for me yet. It is nevertheless made by the developers of Deforum (which kaiber.ai and most Stable Diffusion videos run on). Deforum itself runs perfectly well inside the web UI, and I found it a lot easier than the Deforum Colab notebook. The web UI has lots of intimidating options, but it is not hard to learn and you do not even need to look at any code when you are inside it. There is a lot of community support, plenty of tutorials on YouTube, and a features overview at https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Features

I've been a real SD web UI junkie lately: I can run Deforum, train my own models, make vector images directly from prompts, and work with 3D depth, 3D models, poses and 360. Not to mention the Parseq sequencer, another amazing reason to work with Python: https://sd-parseq.web.app/deforum
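Since Python came up: the web UI can also be driven from a script instead of the browser. Here is a minimal sketch, assuming the web UI was launched with its `--api` flag and is listening on the default local address `http://127.0.0.1:7860`. The endpoint `/sdapi/v1/txt2img` and the payload fields are as I understand them from the web UI's API wiki, so check the `/docs` page of your own install before relying on them. The helper names `build_payload` and `txt2img` are made up for illustration.

```python
import base64
import json
import urllib.request

# Assumption: web UI started with --api on the default host and port.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_payload(prompt, steps=20, width=512, height=512, seed=-1):
    """Build the JSON body for a txt2img request (seed -1 means random)."""
    return {
        "prompt": prompt,
        "steps": steps,
        "width": width,
        "height": height,
        "seed": seed,
    }


def txt2img(prompt, out_path="out.png", url=API_URL, **kwargs):
    """Send the prompt to a running web UI and save the first image."""
    body = json.dumps(build_payload(prompt, **kwargs)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        result = json.loads(response.read())
    # Images come back as base64 strings; drop a data-URL prefix if present.
    image_b64 = result["images"][0].split(",", 1)[-1]
    with open(out_path, "wb") as handle:
        handle.write(base64.b64decode(image_b64))
    return out_path
```

With the web UI running, `txt2img("a lighthouse at dusk", steps=15)` should write `out.png` next to the script. On a 4GB card I would keep the width and height at 512 to start with.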

There is a host of plugins and extensions for the web UI. You can find the entire list here: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Extensions and a top-5 selection here: https://colingallagher.me/2023/04/08/top-5-web-ui-plugins.html

https://github.com/AUTOMATIC1111/stable-diffusion-webui-extensions

You can see in the screenshot above which ones I have installed. If you do a local install, make sure it is in a location where you have full permissions. I had to use an external drive because of the massive sizes of the various models, and I pretty much have to run everything manually, including updates. That is an interesting exercise in Python, but one best skipped, as it is easier to install and update everything from within the web UI. This week I am also going to test everything in Colab.

Need I mention that I only have an RTX 3050 with 4GB of VRAM? I cannot do pro work with that, but it is enough to learn and test everything, so do not let it hold you back. Apart from running simultaneously with Unreal/TD, making high-res videos, training your own models and testing the new SDXL model, you are not going to miss much even with 4GB.

Of course it is well worth getting to know Colab even if you do have a full 16GB GPU or, even better, a maxed-out 64GB M1 (most of these scripts now work on M1; I have not had the opportunity to test on M2 yet but will do so for one of my students in the coming weeks). It is a much better option than letting all these online apps milk you. For the basic $10 per month you can use a 16GB GPU 4-5 days a week (translate into hours), though you do need a premium Google Drive subscription if you go really crazy on AI. For the $40 Colab subscription you occasionally get access to the whopping 40GB A100 GPU, a real beast I must say.
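To get a feel for why the models ended up on an external drive: the weight file of a model is roughly its parameter count times the bytes per parameter (4 for fp32, 2 for fp16). Below is a small sketch of that arithmetic plus a free-space check using only the Python standard library. The helper names and the 5GB headroom are my own assumptions, not something the web UI ships, and the parameter count in the usage note is just a round number for illustration.

```python
import shutil

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def estimate_checkpoint_gb(num_params, dtype="fp16"):
    """Rough weight-file size in GB (10^9 bytes): parameters x bytes each."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def has_room(path, needed_gb, headroom_gb=5.0):
    """True if the drive holding `path` has needed_gb plus headroom free."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= needed_gb + headroom_gb
```

For example, `estimate_checkpoint_gb(1_000_000_000, "fp16")` gives 2.0, and `has_room("D:/models", 2.0)` (a hypothetical path) tells you whether that drive can take it. Real checkpoint files can differ a bit from this estimate because of extra components and metadata.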

So here is the Colab notebook for the web UI, with a little tutorial (there are advantages and disadvantages; I will try to improve my understanding and write about them next month):

https://deeplizard.com/lesson/sda3rdlazi#:~:text=It%27s%20important%20to%20note%20that,UI%20under%20the%20free%20version.

We are almost at the end. As a passing note, I did want to break down the tech behind Skybox, LeiaArt and text-to-video apps like Gen2 and Pika Labs. If you checked my image, you can see I already have the text-to-video extension (https://github.com/kabachuha/sd-webui-text2video) and the 3D depth models set up. Text-to-video models are insanely heavy on RAM and very much at an initial stage, but if you've got an M1, damn, lead the way, you pioneer you. That is pretty much it.

Skybox is a bit more complex but still works on Stable Diffusion. It is based on a series of 360-degree research projects done on SD version 1.5: a custom model was developed with Intel, and the data is actually processed in TouchDesigner to create the 360 views. Someone has already developed a new 360 model with SDXL, and the SD base version is already at 2.1, so it is really insane how fast the field is advancing.

https://www.intel.com/content/www/us/en/newsroom/news/intel-introduces-3d-generative-ai-model.html

https://huggingface.co/ProGamerGov/360-Diffusion-LoRA-sd-v1-5

https://3dmv2023.github.io/assets/posters/21.pdf

Oh, and as a closing note, I came across these really interesting ones, worth a check:

https://www.3dgenerative.ai/#howitworks

https://www.reallusion.com/character-creator/download.html (I have not looked into animating 3d models or images with audio, or whether it is achievable with an existing webUI extension, though theoretically it makes sense to build a plugin for this)

https://github.com/nerfstudio-project/nerfstudio (your go-to for NeRF research; I have not tested it yet)

Discussion (4)

If you have an old GPU with 4GB of VRAM, I highly recommend using ComfyUI, as it's much faster than Automatic1111 and InvokeAI. ComfyUI is also very powerful and really easy to install. Don't waste your time and energy, try ComfyUI: github.com/comfyanonymous/ComfyUI

I am running ComfyUI inside Automatic1111, and also running Automatic itself and all its extensions on 4GB. Yes, ComfyUI is very easy to install, but it is not easy to understand. Automatic is easier to understand but tricky to install (I'll make a list of common errors and solutions in a week or two). Moreover, it gives you access to incredibly useful stuff like Deforum, ControlNet, text to video, depth maps, pose estimation, vector studio (to make vectors directly from prompts), a prompt generator, an artist database, training your own models and 360 diffusion, amongst others. It also has plugins to directly incorporate Photoshop and EbSynth. The main goal of recommending Automatic is to give creatives complete control over most AI vision related stuff. If you merely want to use Stable Diffusion for images and play around with a modular workflow, then you can use ComfyUI (it does not even support videos yet). Perhaps AInodes will work as an in-between, but it has lots of bugs at the moment.

It looks like there is an EASY way to install many kinds of AI stuff: Pinokio is a browser that lets you install, run, and programmatically control ANY application, automatically. pinokio.computer/

youtu.be/Gunh6VoEJuU

It's possible to use a sequence of images as img2img input with ComfyUI; there are tons of extra nodes you can find.