Download Pack

This pack contains 52 VJ loops (40 GB)

https://www.patreon.com/posts/79406058

Behind the Scenes

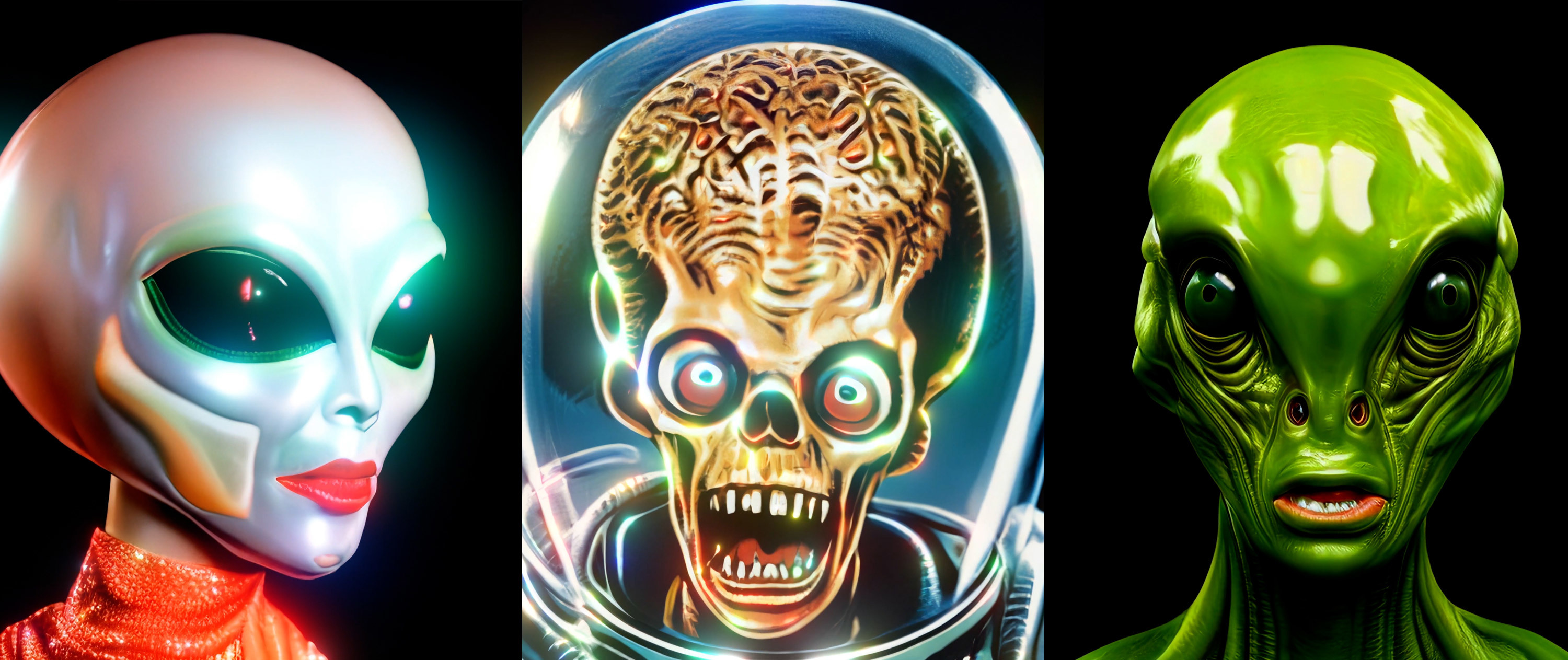

We are aliens riding on this strange spaceship earth. But let's visualize some real aliens, eh?

For the "Wrinkled" scenes, I was able to create a Stable Diffusion (SD) text prompt that would reliably output images of a wrinkly alien in several varieties. But I wanted SD to output only bust portrait shots, which it struggles with. From recent experiments I knew that an init image at 10% strength would give just enough guidance to the starting noise state while still leaving enough creative freedom to extrapolate lots of fresh images. So I output 100 human face images from the FFHQ-512 StyleGAN2 (SG2) model, used them as init images in SD, and rendered out 31,463 images. Then I trained SG2 on them and it converged without issues.
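As a rough illustration of why a low-influence init image still leaves SD plenty of freedom, here is a minimal sketch of how an img2img "strength" setting splits the denoising schedule. It assumes the convention used by Hugging Face diffusers' img2img pipeline, where `strength` is the amount of noise added to the init image (1.0 ignores it entirely). Some UIs instead label the init image's *influence* as its strength; under that reading, "10% init strength" would correspond to roughly 0.9 here. The function name `img2img_schedule` is hypothetical, and the sketch does not call any SD library.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple[int, int]:
    """Split an img2img run into (steps skipped, steps actually denoised).

    Assumes a diffusers-style convention: the init image is noised up to an
    intermediate timestep, and only the last `strength` fraction of the
    schedule is denoised. strength=1.0 starts from pure noise.
    """
    if num_inference_steps <= 0:
        raise ValueError("num_inference_steps must be positive")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # Number of denoising steps that will actually run
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    # The earliest (noisiest) steps are skipped because the noised init image stands in for them
    steps_skipped = num_inference_steps - steps_run
    return steps_skipped, steps_run


if __name__ == "__main__":
    for s in (0.1, 0.5, 0.9):
        skipped, run = img2img_schedule(50, s)
        print(f"strength={s}: skip {skipped} steps, denoise {run} steps")
```

With 50 steps and a strength of 0.9, only the 5 noisiest steps are replaced by the init image, so it mainly nudges the overall composition (a bust-shaped layout) while the prompt drives the details.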

From there I wanted to output some green aliens against a black background, which is currently a tricky request for SD. So I grabbed a human face image from the FFHQ-512 SG2 model, crudely cut it out, tinted it green, and filled in the background with pure black. I used this as the init image and generated 8,607 images. I then trained SG2 on the images and it converged very quickly since the images are very self-similar. I also realized this dataset was a good candidate for training StyleGAN3 (SG3). Since SG3 is sensitive to datasets with too much variety and also takes twice as long to train, I often shy away from experimenting with it. But I knew it would converge quickly, and I ended up being correct.
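The crude init-image prep described above (cut out, tint green, black background) could be scripted roughly like this. This is a minimal NumPy sketch, assuming you already have the face as an RGB array and a hand-made boolean mask of the cut-out; the function name, the use of Rec. 601 luma weights, and the default tint values are illustrative assumptions, not the exact edit made for this pack.

```python
import numpy as np


def make_green_init(face: np.ndarray, mask: np.ndarray,
                    tint=(0.35, 1.0, 0.35)) -> np.ndarray:
    """Tint a cut-out face green and fill everything outside it with black.

    face: H x W x 3 uint8 RGB image.
    mask: H x W bool array, True where the cut-out subject is (assumed hand-made).
    """
    if face.shape[:2] != mask.shape:
        raise ValueError("mask must match the image height and width")
    rgb = face.astype(np.float32) / 255.0
    # Rec. 601 luma keeps the face's shading while discarding its original colors
    luma = rgb @ np.array([0.299, 0.587, 0.114], dtype=np.float32)
    # Re-color the shading with a green tint (per-channel multipliers)
    tinted = luma[..., None] * np.asarray(tint, dtype=np.float32)
    # Pure black background outside the cut-out
    tinted[~mask] = 0.0
    return np.clip(np.round(tinted * 255.0), 0, 255).astype(np.uint8)
```

The face could be loaded with Pillow, for example `np.asarray(Image.open(path).convert("RGB"))`, and the result saved back with `Image.fromarray(...)` before being used as the SD init image.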

For the "Grays" scenes, I was trying to create big-eyed aliens wearing haute couture fashion. This was the initial inspiration for this pack, but I had trouble writing a text prompt that did this reliably, even when using 20 init images of humans against a black background. Still, I was really excited about the few good images among the chaff. So I output around 40,000 images and then had to manually curate them down to 11,952. This was a tedious task and reminded me why it's not really worth the mental drudgery, and yet I love the end result, alas. I then trained SG2 on it for multiple days, likely one of the more heavily trained models I've done yet. It had a bit of trouble converging due to the huge variety of aliens in the dataset, but it finally did converge, mostly on the typical Gray alien, since that type appeared most often in the dataset.

For the "Big Brain" scenes, I really wanted to visualize some angry screaming aliens with big brains. After much toiling I finally nailed down a text prompt and output 8,631 images from SD. SG2 converged easily overnight since the images were quite self-similar. The added slitscan effect really makes it feel as though the alien is falling into a black hole.

From there it was all compositing fun in After Effects (AE). First I used Topaz Video AI to uprez the videos with the Proteus model, setting Revert Compression to 50. Then I took everything into AE and experimented with some favorite effects such as Time Difference and Deep Glow.

I can't remember where I saw it, but someone had created a unique boxed slitscan effect and I wondered how they technically did it. I've long used the Zaebects Slitscan AE plugin for this effect, but it renders very slowly and can only do vertical or horizontal slitscans. So I started hunting for alternate techniques using FFMPEG and instead stumbled across a tutorial on how to use AE's native Time Displacement effect to achieve the same slitscan effect! This ended up being a huge savings in render time since it supports multi-frame rendering. Ironically I still only did vertical or horizontal slitscans, but in the future I have all sorts of wild ideas to try out, such as hooking in a displacement map to drive it.

I also explored some other native AE effects and found some amazing happy accidents with the Color Stabilizer effect, which lets you select 3 zones within a video for it to watch for pixel changes and then uses them to control the black, mid, and white points of a Curves adjustment. It has an electric, glitchy feeling that I really love and is perfect for giving these aliens that extra wild, warpy feeling.
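To make the Time Displacement slitscan trick concrete: the effect reads a displacement map and pulls each pixel from a different moment in time, so a simple top-to-bottom gradient gives a classic slitscan, and any other map just changes the pattern. Below is a minimal NumPy sketch of that idea, not Adobe's implementation. It assumes the frames are already decoded into a T x H x W x C array, it only looks backward in time, and it clamps at the first frame. The names `time_displace` and `gradient_map` are hypothetical.

```python
import numpy as np


def gradient_map(height: int, width: int, by_rows: bool = True) -> np.ndarray:
    """0..1 ramp across rows (top to bottom) or across columns (left to right)."""
    if by_rows:
        ramp = np.linspace(0.0, 1.0, height)[:, None]
    else:
        ramp = np.linspace(0.0, 1.0, width)[None, :]
    return np.broadcast_to(ramp, (height, width))


def time_displace(frames: np.ndarray, disp_map: np.ndarray, max_offset: int) -> np.ndarray:
    """Pull each pixel from an earlier frame, delayed by disp_map * max_offset frames.

    frames: T x H x W x C array; disp_map: H x W array with values in [0, 1].
    """
    t_count, height, width = frames.shape[:3]
    if disp_map.shape != (height, width):
        raise ValueError("disp_map must match the frame height and width")
    # Per-pixel delay in whole frames (0 = use the current frame)
    offsets = np.round(np.clip(disp_map, 0.0, 1.0) * max_offset).astype(int)
    ys, xs = np.indices((height, width))
    out = np.empty_like(frames)
    for t in range(t_count):
        # Source frame index for every pixel, clamped so early frames reuse frame 0
        src = np.clip(t - offsets, 0, t_count - 1)
        # Advanced indexing picks frames[src[y, x], y, x] for each pixel
        out[t] = frames[src, ys, xs]
    return out
```

For example, `time_displace(frames, gradient_map(h, w), max_offset=30)` makes the bottom rows lag up to 30 frames behind the top, and `by_rows=False` switches to columns. Swapping in a hand-painted or animated `disp_map` is the NumPy analogue of hooking a custom displacement layer into the effect. Holding every frame in memory is the main cost of this naive approach.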

The unleashed creative imagination of Stable Diffusion is mind-boggling. Having generated several datasets of 10,000 to 50,000 images with SD and then manually curated them, I can clearly see how much variety it's capable of within a given theme. I can't help but think of all the permutations that humanity has never fathomed and that SD now hints at. SD has learned from so many different artists and can interpolate between them all. As the tool matures, I can easily see how it will mutate many different disciplines.

Even after tons of testing, I've long been perplexed by the Gamma attribute of StyleGAN2/3. So I reached out to PDillis again and he explained a helpful way to think about it. Consider Gamma a sort of data distribution manipulator. Imagine your dataset as a mountainous terrain where the peaks represent the various modes in your data. Gamma controls whether the neural network distinguishes those peaks or averages them into one. For example, the FFHQ face dataset contains lots of groups: facial hair, eyeglasses, smiles, age, and such. Setting a high Gamma value (such as 80) will average these details together into a basic face (2 eyes, nose, mouth, hair), and the Generator will become less expressive as it trains. Setting a low Gamma value (such as 10) will allow more of the unique aspects to be visualized, and the Generator will become more expressive as it trains. Yet if you set a low Gamma too early in the training process, the model might never converge (remaining blobby) or might even collapse. So when starting training you should set Gamma quite high (such as 80) so that the Generator learns to create the correct images. Then, once the images from that model are looking good, you can resume training with a lower Gamma value (such as 10). This makes intuitive sense to me in the context of transfer learning, since first the model needs to learn the basics of the new dataset and only then can you refine it. I think 10 is often a fine starting point when your dataset is homogeneous. But if your dataset is full of unique details and it's difficult to discern a common pattern across the photos, then raising Gamma to 80 or more is necessary. That explains why my models have recently increased in quality. A huge breakthrough for me, so again many thanks to PDillis.
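For context, in NVIDIA's official StyleGAN2-ADA and StyleGAN3 training scripts, `--gamma` is the weight of the R1 regularization penalty, roughly (γ/2)·E[‖∇D(x)‖²] over real images, which penalizes the discriminator for reacting sharply to small changes in real images. The two-phase approach described above (start high, then resume lower) could be scripted as below. This sketch assumes the stylegan3 repo's `train.py` and its `--outdir`, `--cfg`, `--data`, `--gpus`, `--batch`, `--gamma`, and `--resume` options; the helper name `train_cmd`, the dataset path, and the snapshot filename are hypothetical placeholders.

```python
import shlex
from typing import List, Optional


def train_cmd(data: str, outdir: str, gamma: float, resume: Optional[str] = None,
              cfg: str = "stylegan2", gpus: int = 1, batch: int = 32) -> List[str]:
    """Build a stylegan3 train.py command line for one training phase."""
    cmd = [
        "python", "train.py",
        f"--outdir={outdir}",
        f"--cfg={cfg}",          # stylegan2, stylegan3-t, or stylegan3-r
        f"--data={data}",
        f"--gpus={gpus}",
        f"--batch={batch}",
        f"--gamma={gamma:g}",    # R1 regularization weight
    ]
    if resume is not None:
        cmd.append(f"--resume={resume}")  # continue from an earlier snapshot .pkl
    return cmd


if __name__ == "__main__":
    # Phase 1: high gamma until the samples look right (hypothetical paths)
    phase1 = train_cmd("datasets/aliens-512.zip", "training-runs", gamma=80)
    # Phase 2: resume from a phase-1 snapshot with a lower gamma (hypothetical filename)
    phase2 = train_cmd("datasets/aliens-512.zip", "training-runs", gamma=10,
                       resume="training-runs/00000-phase1/network-snapshot-001000.pkl")
    for cmd in (phase1, phase2):
        print(shlex.join(cmd))
```

Keeping the phase change as a separate `--resume` run, rather than changing Gamma mid-run, means you decide when the samples "look good" by inspecting the phase-1 snapshots yourself.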

I also learned how vital it is to have at least 5,000 images in your dataset, preferably 10,000. Now that I've been using Stable Diffusion to generate images for my datasets, I've seen what a huge difference it makes in the training process of StyleGAN2/3. In the past I often used datasets of between 200 and 1,000 images, which frequently meant stopping training prematurely because the model was becoming overfit. So it's very interesting to finally have enough data and see how that affects the end result.
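A tiny sanity check before training can flag datasets that fall below the rule of thumb above. This is only a sketch: the 5,000 and 10,000 thresholds are the ones from this post rather than hard limits, and the helper names and the set of accepted file extensions are assumptions.

```python
from pathlib import Path

# Assumed set of image extensions to count
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def count_images(folder: str) -> int:
    """Count image files anywhere under `folder`."""
    return sum(1 for p in Path(folder).rglob("*")
               if p.is_file() and p.suffix.lower() in IMAGE_EXTS)


def dataset_size_verdict(n_images: int, minimum: int = 5_000,
                         preferred: int = 10_000) -> str:
    """Classify a dataset size against the post's rule of thumb."""
    if n_images < minimum:
        return "too small: expect early overfitting"
    if n_images < preferred:
        return "ok"
    return "preferred"
```

For example, `print(dataset_size_verdict(count_images("datasets/grays")))` (hypothetical path) would report "preferred" for a curated set like the 11,952-image Grays dataset.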

I had originally intended on doing some 3D animation of a UFO flying around and traveling at warp speed, so in the future I must return to this theme. I want to believe!
